Modeling marked temporal point process using multi-relation structure RNN

Published in Cognitive Computation, 2019

Recommended citation: Hongyun Cai, Thanh-Tung Nguyen, Yan Li, Vincent W Zheng, Binbin Chen, Gao Cong, Xiaoli Li (2019). "Modeling marked temporal point process using multi-relation structure RNN." In Cognitive Computation.

Paper Link: https://link.springer.com/article/10.1007/s12559-019-09690-8

Abstract

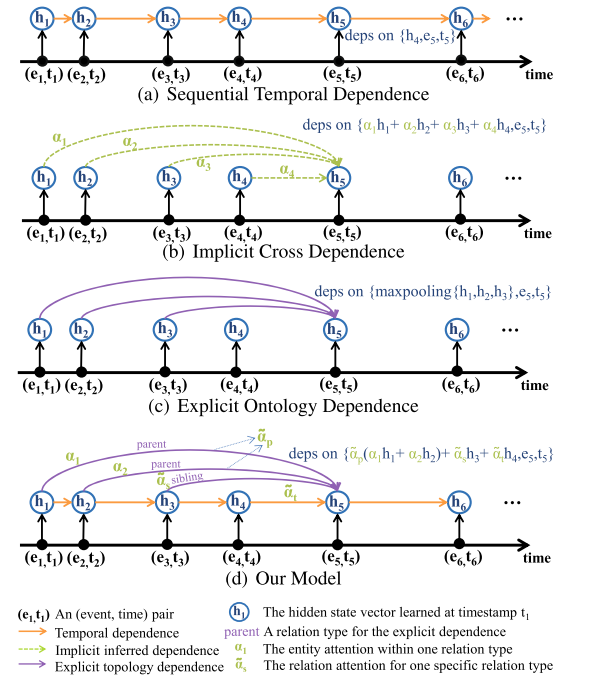

Event sequences with marker and timing information are available in a wide range of domains, from machine logs in automatic train supervision systems to information cascades in social networks. Given the historical event sequences, predicting what event will happen next and when it will happen can benefit many useful applications, such as maintenance service scheduling for mass rapid transit trains and product advertising in social networks. The temporal point process (TPP) is one effective solution to the next event prediction problem due to its capability of capturing the temporal dependence among events. Recent recurrent temporal point process (RTPP) methods exploit recurrent neural networks (RNNs) to get rid of the parametric form assumption in the density functions of TPP. However, most existing RTPP methods focus only on the temporal dependence among events. In this work, we design a novel multi-relation structure RNN model with a hierarchical attention mechanism to capture not only the conventional temporal dependencies but also the explicit multi-relation topology dependencies. We then propose an RTPP algorithm whose density function is conditioned on the event sequence embedding learned from our RNN model to cognitively predict the next event marker and time. The experiments show that our proposed MRS-RMTPP outperforms the state-of-the-art baselines in terms of both event marker prediction and event time prediction on three real-world datasets. The capability of capturing both ontology relation structure and temporal structure in the event sequences is of great importance for the next event marker and time prediction.
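To make the idea of a density function conditioned on a learned sequence embedding more concrete, the sketch below evaluates the time density used by the original RMTPP formulation (Du et al., 2016), in which the conditional intensity is an exponential of a history term plus a linear function of the elapsed time. This is only an illustration under stated assumptions: the abstract does not confirm that MRS-RMTPP uses exactly this form, and the names `past_influence`, `w`, and `gap` are hypothetical. Here `past_influence` stands in for the scalar an RNN embedding would contribute (a projection of the hidden state plus a bias).

```python
import math


def intensity(past_influence: float, w: float, gap: float) -> float:
    """RMTPP-style conditional intensity at `gap` time units after the last event.

    Assumption: lambda*(gap) = exp(past_influence + w * gap), where
    `past_influence` is a hypothetical scalar summary of the event history.
    """
    return math.exp(past_influence + w * gap)


def log_density(past_influence: float, w: float, gap: float) -> float:
    """Log of the next-event time density f*(gap).

    f*(gap) = lambda*(gap) * exp(-integral of lambda* from 0 to gap),
    and the integral has the closed form
    (exp(past_influence + w * gap) - exp(past_influence)) / w for w != 0.
    """
    integral = (math.exp(past_influence + w * gap) - math.exp(past_influence)) / w
    return past_influence + w * gap - integral


def density(past_influence: float, w: float, gap: float) -> float:
    """Density of the waiting time until the next event."""
    return math.exp(log_density(past_influence, w, gap))


if __name__ == "__main__":
    # Compare how likely a short versus a long waiting time is
    # for one hypothetical history summary.
    for gap in (0.1, 1.0, 3.0):
        print(gap, density(0.0, 0.5, gap))
```

Training such a model typically maximizes `log_density` over the observed inter-event gaps, together with a classification loss for the event marker. With `w > 0` the density integrates to one over all positive gaps; with `w < 0` some probability mass is left for the event never occurring.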

Summary